Restoring sight to the blind and motor function to people with disabilities: what's the science behind it all? Seif Eldawlatly, associate professor of computer science and engineering, is unlocking new possibilities for people with disabilities and others through his research in the futuristic field of brain-computer interfaces (BCIs).

Now, after 18 years of work in the field, Eldawlatly is helping AUC develop its expertise in BCIs and exploring opportunities to improve everyone's lives. "This field is relatively new, and not many people in Egypt or the region actually work in this research area," he says.

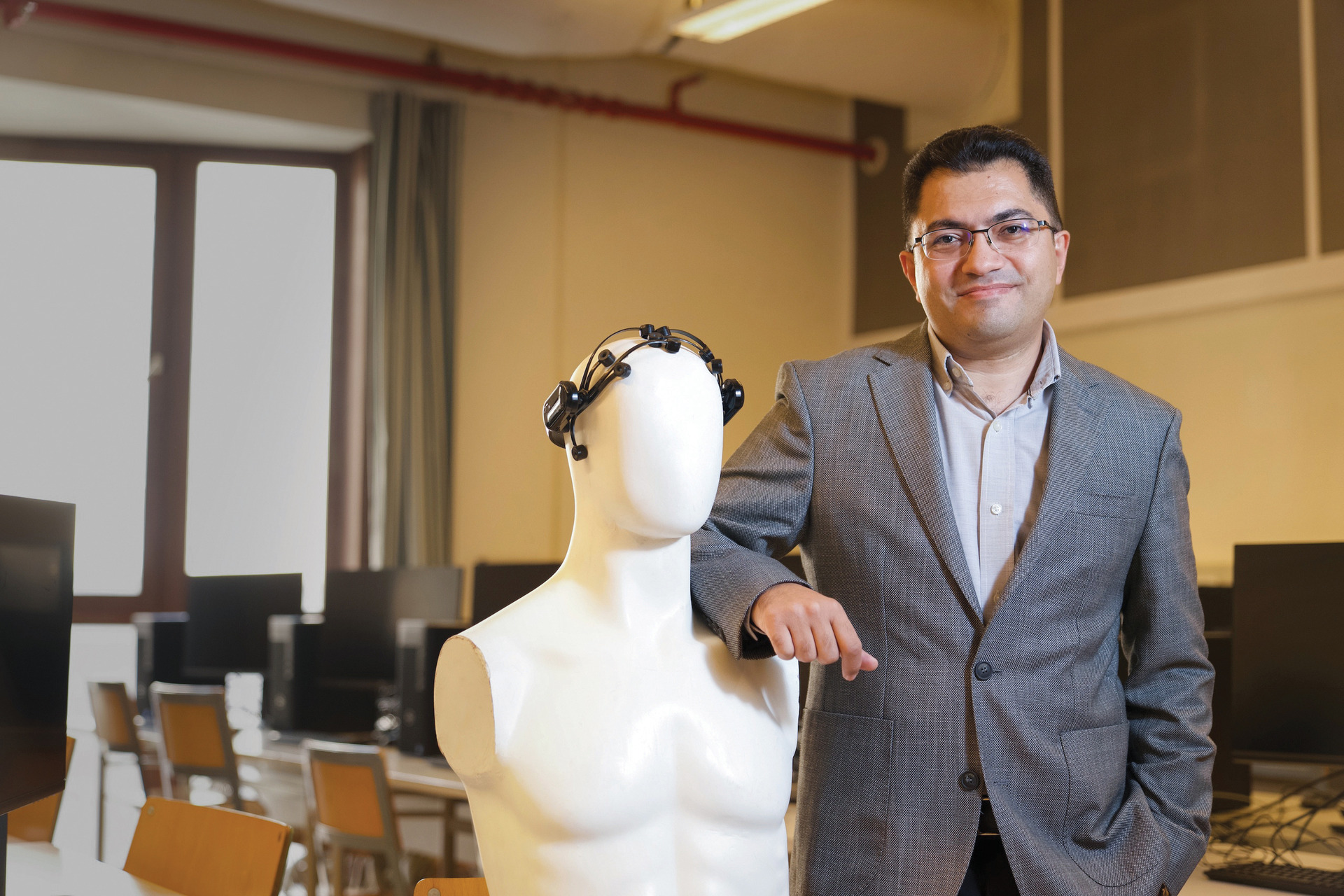

Associate Professor Seif Eldawlatly is introducing the cutting-edge field of brain-computer interfaces to AUC

Technological Trajectory

Eldawlatly's work has evolved alongside the technology. Visual prosthetics use implanted electrodes to recreate, directly in the brain, the signals that would normally arrive from the eyes. "Our eyes are almost like a camera taking a picture of what we see, but the actual vision and perception take place in the brain. We understand what we see through the process in our brains," he says. So when the eye malfunctions or stops working due to disease, Eldawlatly says it is possible to bypass it and stimulate the brain directly, artificially providing the input that was supposed to come from the eye.

A chip with wires serving as some 30 electrodes could be implanted in the brain, receiving input from an external camera mounted on a pair of glasses. "That's what the field of visual prosthetics is: We try to use artificial intelligence (AI) to deliver electrical pulses to certain locations in the brain related to vision. If we send the right signals to these locations, people could see again, at least partially," he says.

In his research on restoring motor function, Eldawlatly has mostly used electroencephalography (EEG) headsets, a non-invasive technology that records the brain's electrical activity through small sensors attached to the scalp.

In one of Eldawlatly's experiments, people with disabilities were able to write words by selecting letters displayed in boxes that flickered at different rates; looking at each box elicits a distinct rhythm of electrical activity in the brain.

Once the EEG headset picked up these signals, AI algorithms decoded them and the computer produced the intended letter, allowing the person to write using only their brain. In a related project, the system instead detected a spike in the EEG when the desired character was displayed, providing an alternative to the flickering boxes. Using the same technology, patients were also able to move their wheelchairs.
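Why does a flickering box identify a letter? A light flickering at a steady rate drives a matching rhythm in the visual cortex, known as a steady-state visual evoked potential (SSVEP). The Python sketch below shows that decoding idea under stated assumptions. The sampling rate, the four letters and their flicker frequencies, and the helper names `power_at` and `pick_letter` are hypothetical, and the EEG window is simulated. A simple spectral-power comparison stands in for whatever AI model Eldawlatly's team actually used.

```python
import math

def power_at(signal, freq_hz, fs_hz):
    """Spectral power of `signal` at a single frequency, via a one-bin DFT."""
    re = sum(x * math.cos(2 * math.pi * freq_hz * n / fs_hz) for n, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq_hz * n / fs_hz) for n, x in enumerate(signal))
    return re * re + im * im

def pick_letter(signal, box_freqs, fs_hz):
    """Return the letter whose box flicker frequency carries the most power in the EEG window."""
    return max(box_freqs, key=lambda letter: power_at(signal, box_freqs[letter], fs_hz))

# Hypothetical setup: 250 Hz sampling, four boxes each flickering at its own rate.
FS = 250
BOX_FREQS = {"A": 8.0, "B": 10.0, "C": 12.0, "D": 15.0}

# Simulated 2-second, single-channel EEG window: a 12 Hz response
# (the user is looking at box "C") plus weaker 50 Hz power-line noise.
window = [
    math.sin(2 * math.pi * 12.0 * n / FS) + 0.3 * math.sin(2 * math.pi * 50.0 * n / FS)
    for n in range(2 * FS)
]

print(pick_letter(window, BOX_FREQS, FS))  # → C
```

Practical systems usually combine several EEG channels and the harmonics of each flicker frequency, and they rely on more robust classifiers such as canonical correlation analysis. This sketch only shows why distinct flicker rates make the letters distinguishable.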

BCIs can also enhance daily life for people without disabilities or diseases. Eldawlatly shared the example of an AUC senior project he supervised, conducted in collaboration with Siemens, that used brain signals to trigger emergency braking in vehicles.

"When an emergency-braking situation happens, such as a car in front of us suddenly stopping, many people panic for, say, 100 milliseconds, but their brain detects that they need to stop the car even if they don't press the brakes. They hesitate for a moment, and because of that, an accident might happen," he explains. By detecting the brain pattern that corresponds to this emergency-braking response, the system can stop the car and avoid the accident.

Additionally, Eldawlatly has been developing AI techniques to diagnose amyotrophic lateral sclerosis (ALS), a fatal neurodegenerative disease that is usually diagnosed only in its later stages. Using machine learning algorithms, Eldawlatly's team is working to identify abnormal patterns in signals from the spinal cord. "If we can diagnose the disease early on, we can start administering drugs to slow its progress, elongating the life of the patient and preventing them from losing all function," he says.

Field of the Future

Much of Eldawlatly's work may sound aspirational and futuristic, far off from the world we live in now. But BCIs are already in use around the world, and some companies are already implanting chips in the brains of paralyzed patients seeking mobility, or a simulation of mobility. And while Eldawlatly has not worked on invasive procedures outside of animal testing, he believes the future lies in both invasive BCI, implemented through the surgical insertion of electrodes in the brain, and non-invasive BCI, through external apparatuses such as EEG headsets.

Given that future, he emphasizes the need for strict ethical guidelines around BCI practices. Regarding invasive techniques, Eldawlatly says, "All the work being done in this field of research has to follow strict ethical guidelines. Otherwise, if it falls into the wrong hands, the technology might cause issues. However, the good news is that patients have to undergo surgery first, so they have to agree to it."


He added that, whether surgically invasive or not, brain monitoring raises privacy concerns, so ethics are always a priority. "In both cases, we're getting information about what the brain is trying to do, and the brain, not our face or fingerprint, is the true representation of our identity. So the data should not be used for anything that the subject does not approve," Eldawlatly says.

Though he works in data analysis rather than hardware development, Eldawlatly says the technology could soon become widely accessible, with EEG headsets already available across a range of prices. "Once the industry turns the research into a product, it becomes a reality. It becomes something that everyone is using," he says. He compares BCI to AI, which was not widely known until ChatGPT became publicly accessible, even though researchers had been developing AI technology for decades. "Now everyone is using AI, so the same thing might happen in brain-computer interfaces," he says.